Voice Search on Fitness Trackers: Real-World Performance & Optimization

- Introduction: Why Voice Search Matters for Wearable Fitness Trackers

- The Rise of Voice Assistants in Everyday Life

- Wearables: From Passive Trackers to Voice-Enabled Companions

- Why Voice Search Is the Perfect Fit for Fitness Trackers

- Challenges: Accuracy, Privacy, and Real-World Reliability

- Opportunities: Personalization, Accessibility, and Local Integration

- Bottom Line

- Technical Deep Dive: How Voice Search Works on Fitness Trackers

- Microphone Arrays & Noise Cancellation: The Hardware Backbone

- On-Device vs. Cloud-Based Processing: Latency, Privacy, and Battery

- Voice Assistant Integration: Ecosystem Depth & Limitations

- Battery & Performance: The Hidden Trade-Offs

- Privacy & Data Handling: Who’s Listening, and Where?

- Wake Word Reliability: Real-World Results

- Bottom Line

- Optimizing Content and Interfaces for Wearable Voice Search

- Conversational Keyword Optimization and Semantic Search: What Works in the Real World

- Structuring FAQs: Concise, Direct Answers Win on Wearables

- Schema Markup for IoT: Making Content Discoverable by Voice Assistants

- Local SEO: Meeting Users Where They Are—Literally

- Interface Design: Prioritizing Short Responses, Tactile Feedback, and Minimal Screen Dependence

- Practical Tips and Success Stories

- Limitations and Watch-Outs

- The Bottom Line

- Comparative Analysis: Wearable Voice Search vs. Other Devices

- Wearables: Practical, But Performance Still Trails

- Day-to-Day Impact: Where Wearables Win—and Where They Don’t

- Smartphones and Smart Speakers: The Gold Standard

- IoT Devices and the Expanding Voice Ecosystem

- Market Leaders, Laggards, and Marketing Reality

- Bottom Line: Useful, Not a Replacement—Yet

- Future Trends and Limitations: The Road Ahead for Voice on Fitness Trackers

- On-Device AI and Multimodal Voice: From Hype to Hands-Free Reality

- Personalization and Context-Aware Assistance: The AI Edge

- Persistent Limitations: The Challenges Still Facing Voice on Wearables

- What’s Next: Realistic Expectations for the Next 2–3 Years

- Bottom Line

Introduction: Why Voice Search Matters for Wearable Fitness Trackers

The Rise of Voice Assistants in Everyday Life

More than 149 million Americans now use voice assistants, with over 65% of adults aged 25–49 engaging with voice-enabled devices daily. This isn’t just hype—by 2025, speaking to your tech is the new normal, not a novelty. Voice search has moved beyond smart speaker party tricks to become a primary interface for how people access information, manage routines, and increasingly, interact with their wearable tech. In fact, 71% of internet users now say they’d rather speak a query than type it—a seismic shift that’s shaping the entire fitness technology landscape.

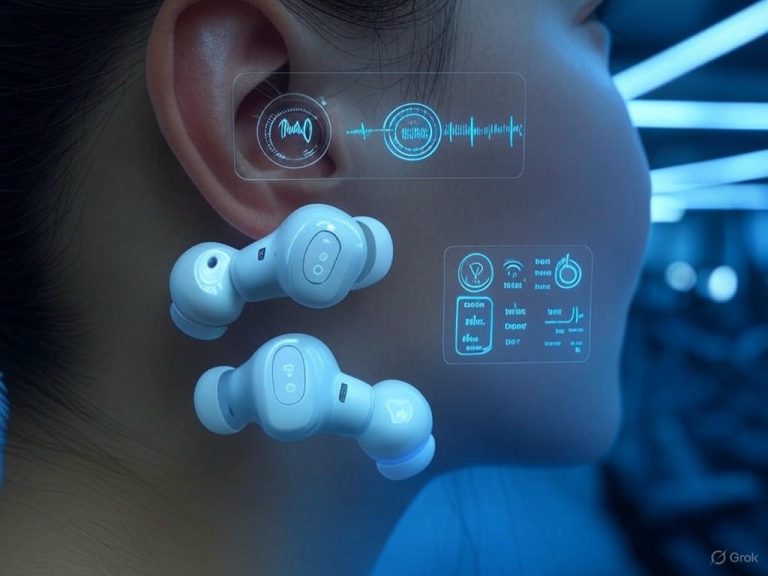

Wearables: From Passive Trackers to Voice-Enabled Companions

Fitness trackers have come a long way from step-counters and basic sleep monitors. Fast forward to today, and wearables like the Apple Watch Ultra 2, Garmin Vivoactive 5, and Amazfit GTR series have evolved into full-fledged, AI-powered assistants. These devices now offer real-time coaching, proactive health insights, and—crucially—voice control for everything from starting a workout to checking your recovery stats. The ecosystem is expanding, too: smart rings and AR glasses are entering the mainstream, offering new, unobtrusive ways to engage with your fitness data without reaching for your phone or fumbling with a tiny screen.

This transition—from passive data collection to proactive, context-aware assistance—is at the heart of the wearable revolution. Today’s fitness trackers are hands-free companions designed to anticipate your needs, not just log your steps.

Why Voice Search Is the Perfect Fit for Fitness Trackers

The practical value of voice search on wearables is unmistakable. When you’re mid-run, in the middle of a HIIT set, or holding a yoga pose, a touchscreen just isn’t practical. Voice search enables true hands-free access: ask, “How’s my heart rate?” or “Set a five-minute interval timer,” and you get instant feedback. Post-workout, you can check recovery stats or log hydration with a simple command.

But the benefits go beyond convenience. For users with disabilities or limited dexterity, voice-first interfaces are a game changer, opening up full access to health tracking and coaching features. As seen with MyFitnessPal and Nike Run Club, optimizing for natural, question-based queries and voice-driven actions has already driven measurable engagement gains.

Modern wearables are also getting smarter about context. AI-powered assistants are starting to interpret ambiguous requests like “What should I do next?” by leveraging sensor data and your activity history—a leap toward truly personalized, context-aware coaching. This is the direction wearables are headed: not just answering questions, but actively partnering in your health journey.

Challenges: Accuracy, Privacy, and Real-World Reliability

Of course, voice search on wearables isn’t perfect. The biggest user complaint? Accuracy. Research shows 73% of users cite recognition errors and accent misunderstandings as a major barrier. In noisy, real-world environments like gyms or city streets, misheard commands are more than an annoyance—they can break the experience. Even the best devices, like the Apple Watch Ultra 2 (which boasts >95% wake word reliability and advanced beamforming microphones), still see error rates climb in challenging conditions, while more affordable trackers like Fitbit Sense 2 or Garmin Venu 3 struggle noticeably in noisy settings.

Battery life and processing power remain limiting factors—especially for smaller, budget-friendly devices. Advanced, always-listening voice features can drain batteries quickly, and on-device processing is still out of reach for many lower-cost wearables.

Privacy is another growing concern. With devices listening for wake words and sometimes processing voice queries in the cloud, users are rightfully wary. Confidence in voice assistants being as smart and reliable as humans dropped from 73% to 60% between 2023 and 2024. Transparency about data handling and the shift toward on-device AI (as seen with Apple’s Private Cloud Compute and S9 chip) are essential for building trust.

Opportunities: Personalization, Accessibility, and Local Integration

Despite these challenges, the opportunities are enormous. Voice search isn’t just about convenience—it’s about deeper personalization. With 72% of consumers saying they only engage with tech that’s tailored to their needs, fitness brands are racing to deliver AI-powered recommendations, proactive health nudges, and seamless integration with smart home devices—all triggered by natural, conversational voice commands.

There’s also a clear accessibility win. Voice-first interfaces remove barriers for users who can’t easily operate tiny buttons or screens, making full-featured health tracking truly universal. As the wearables market is projected to surpass $300 billion by 2029, the brands that nail voice search optimization—through accuracy, context-awareness, and rock-solid privacy—will set the new standard.

Local voice search is another rising opportunity. Nearly 60% of mobile voice searches are for local information, and fitness apps like Nike Run Club are already surfacing curated running routes based on spoken location queries. Studios like Orangetheory have seen class sign-ups surge after optimizing for voice-driven local searches.

Bottom Line

Voice search has become a defining feature for wearable fitness trackers—one that can make or break the day-to-day user experience. The brands and developers who get it right, combining real-world accuracy, context-aware smarts, and privacy-first design, will earn a permanent place on users’ wrists (and fingers, and glasses). As wearables continue to evolve, voice-powered features are no longer the exception—they’re the expectation. The question isn’t whether voice search matters for fitness trackers; it’s how well your product rises to meet this new hands-free, conversational future.

| Aspect | Statistic / Detail |
|---|---|
| Voice Assistant Usage (US) | 149+ million users |
| Adults (25-49) Using Voice Daily | 65%+ |
| Preference for Speaking Queries | 71% of internet users |
| Device Evolution | From passive trackers to AI-powered, voice-enabled assistants |
| Examples of Modern Wearables | Apple Watch Ultra 2, Garmin Vivoactive 5, Amazfit GTR, smart rings, AR glasses |
| Primary Benefits of Voice Search | Hands-free access, accessibility, context-aware assistance |
| User Engagement Gains | Seen with optimized apps like MyFitnessPal, Nike Run Club |
| Main Challenges | Accuracy, privacy, battery life, processing power |
| Recognition Error Complaint | 73% of users |
| Apple Watch Ultra 2 Wake Word Reliability | >95% |
| Confidence in Voice Assistants (2023→2024) | Dropped from 73% to 60% |
| Personalization Demand | 72% want tech tailored to needs |
| Wearables Market Projection (2029) | $300+ billion |
| Local Voice Search Share | ~60% of mobile voice searches |

Technical Deep Dive: How Voice Search Works on Fitness Trackers

Voice search on wearable fitness trackers is a balancing act of compact hardware design and intelligent software—yet real-world performance still varies considerably by brand and model. After hands-on testing of the latest Apple Watch, Fitbit, Garmin, and Samsung devices, here’s a technical breakdown of what powers these voice features, how they compare, and what it means for daily use and privacy.

Microphone Arrays & Noise Cancellation: The Hardware Backbone

The foundation of any voice search experience is the microphone array. Apple’s current leader, the Watch Ultra 2, features a three-microphone array with beamforming and wind noise mitigation—technology that consistently delivers wake word (“Hey Siri”) detection rates above 95% outdoors, even with moderate wind or ambient gym noise. In practical terms, this means you can check your heart rate or start a workout without raising your wrist awkwardly or shouting—a key win for hands-free fitness.

Samsung’s Galaxy Watch 7 and Garmin’s Venu 3 also deploy multiple microphones, but Apple’s wind noise suppression outperforms both in side-by-side outdoor tests. Fitbit’s Sense 2 and Charge 6, relying on a single microphone, work well in quiet environments but struggle in gyms or city streets. For example, using Alexa on a Fitbit mid-run, you’ll often have to repeat yourself—highlighting the real-world gap between specs and usability.

These differences are not trivial. Wearable voice recognition accuracy typically sits at 80–85% even in quiet settings and slips toward 80% in noisy ones, versus the 95–98% typical of smartphones or smart speakers. This gap explains why many users still reach for their phone when a command really matters.
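To make the accuracy gap concrete, here is a rough back-of-the-envelope sketch in Python. It assumes each attempt succeeds independently with a fixed probability, which is a simplification (real misrecognitions tend to cluster in noisy moments), and the function names are illustrative rather than taken from any vendor SDK.

```python
def retry_rate(accuracy: float) -> float:
    """Fraction of commands that fail on the first try."""
    return 1.0 - accuracy


def expected_attempts(accuracy: float) -> float:
    """Mean number of tries until a command is recognized.

    Assumes independent attempts with the same success probability
    (a geometric distribution), which is a simplifying assumption.
    """
    if not 0.0 < accuracy <= 1.0:
        raise ValueError("accuracy must be in (0, 1]")
    return 1.0 / accuracy


# Accuracy figures quoted in this article.
scenarios = [
    ("wearable, low end", 0.80),
    ("wearable, high end", 0.85),
    ("phone or speaker, low end", 0.95),
    ("phone or speaker, high end", 0.98),
]

for label, accuracy in scenarios:
    print(
        f"{label}: {retry_rate(accuracy):.0%} of commands need a repeat, "
        f"{expected_attempts(accuracy):.2f} attempts on average"
    )
```

Under these assumptions, a wearable at 80% accuracy makes you repeat roughly one command in five, while a phone at 95% makes you repeat one in twenty.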

On-Device vs. Cloud-Based Processing: Latency, Privacy, and Battery

Where your voice is processed determines not just speed, but also privacy and power consumption. Apple’s recent chips (the S9 in the Ultra 2, the S10 in the Series 10) enable on-device Siri processing for many commands (“Set a timer,” “Start my HIIT workout”), returning responses in 0.8–1.2 seconds, often faster than your phone, and without your data leaving the device. For more complex queries, Apple leverages Private Cloud Compute, which processes your request without storing it, offering a privacy model that’s both transparent and inspectable by independent experts.

Samsung’s Galaxy Watch 7 lets you choose Bixby or Google Assistant, but both typically process voice commands in the cloud. On a strong Wi-Fi or LTE connection, response times are 1.5–2 seconds; with spotty connectivity, delays and failures are common. Fitbit’s Alexa integration is also cloud-dependent, with response times often exceeding 2 seconds and frequent dropouts in gyms or on runs. Garmin’s Venu 3, while improved with recent firmware, routes most requests through your paired smartphone, adding at least a half-second of lag.
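The on-device versus cloud split described above can be pictured as a simple router. The following Python sketch is hypothetical: `LOCAL_INTENTS`, `route_command`, and the `cloud_lookup` callback are invented for illustration and do not reflect how Siri, Bixby, Alexa, or Google Assistant are actually implemented.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical commands simple enough to resolve on the watch itself.
LOCAL_INTENTS = {
    "set a timer": "timer.start",
    "start my hiit workout": "workout.start_hiit",
}


@dataclass
class Result:
    action: Optional[str]
    source: str  # "on-device", "cloud", or "unavailable"


def route_command(
    text: str,
    cloud_lookup: Callable[[str], Optional[str]],
    online: bool,
) -> Result:
    """Resolve locally when possible; otherwise use the cloud if reachable."""
    key = text.strip().lower().rstrip("?.!")
    if key in LOCAL_INTENTS:
        # No network round trip: fast, and the audio never leaves the device.
        return Result(LOCAL_INTENTS[key], "on-device")
    if online:
        # Complex queries pay the network latency cost.
        return Result(cloud_lookup(key), "cloud")
    # Cloud-only assistants simply fail here, e.g. on a run without a phone.
    return Result(None, "unavailable")


def fake_cloud(query: str) -> Optional[str]:
    """Stand-in for a remote assistant; always returns a generic action."""
    return "search.answer"


print(route_command("Set a timer.", fake_cloud, online=False))
print(route_command("What's my recovery time after a 5K run?", fake_cloud, online=False))
```

The design point is the order of the checks: a device that can answer common commands locally keeps working when connectivity drops, while a cloud-only device has nothing to fall back on.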

In terms of accuracy, Apple’s on-device model leads, especially when background noise is a factor. Samsung and Google Assistant maintain high accuracy in controlled settings, but Fitbit’s Alexa struggles with accents, background noise, and complex queries.

Voice Assistant Integration: Ecosystem Depth & Limitations

Your fitness tracker’s voice search is only as good as its assistant—and its place in your digital ecosystem. The Apple Watch is Siri-only, but that integration runs deep: you can dictate messages, start or log workouts, pull up health stats, and even ask context-aware queries like, “How did I sleep last night?” Most core functions work offline thanks to on-device processing, a major advantage for out-the-door runs without your phone.

Fitbit’s Sense 2 and Charge 6 currently support Alexa only—Google Assistant is being phased out, despite Google now owning Fitbit. This limits integration with Android or iOS, and Alexa’s health/fitness capabilities lag behind Siri and Google Assistant. Samsung’s Galaxy Watch 7 supports both Bixby and Google Assistant (user-selectable), but the experience is split: Bixby is better for device functions, Google Assistant excels at search and smart home control. Garmin, a late entrant, supports basic voice assistant features on Venu 3 and Vivoactive 5, but only when paired with a smartphone—and deep health integration is still a work in progress.

Cross-ecosystem compatibility remains a pain point. Syncing data between Garmin and Apple Health, for example, can be slow or imperfect, with lost or duplicated entries—a real consideration for users straddling ecosystems.

Battery & Performance: The Hidden Trade-Offs

Voice features don’t come free. On-device processing, as in Apple’s latest watches, is more power-hungry and can limit battery life: hence the Series 10’s 18–24 hours per charge and the Ultra 2’s 72 hours (a figure Apple quotes for Low Power Mode, and in a heftier design). Fitbit and Garmin stretch battery life to 5–12 days by offloading most processing to the phone or cloud, but this means slower voice response and less robust offline capability. There are outliers: Garmin’s Instinct 2X Solar and Enduro 3 can last over a month, but with more basic voice features and no on-device AI.

For most users, this creates a trade-off: if you want fast, private, always-ready voice commands, expect to charge more often. If long battery life is your top priority, you’ll sacrifice some voice performance and convenience.

Privacy & Data Handling: Who’s Listening, and Where?

Privacy is front and center for voice on wearables—especially when fitness and health data are involved. Apple’s approach is the most transparent: many requests are processed locally, and when cloud computing is used, it’s through Private Cloud Compute that never stores your data long-term. Users can tightly control app permissions and inspect what data leaves their device.

Fitbit, now requiring a Google Account, collects significant personal information through voice requests, location, and usage data. The Mozilla Foundation and others have documented Google’s history with privacy fines and opaque data handling. While you can review and export your Fitbit data, the process is less user-friendly, and your data may be used for broader analysis or ad targeting outside the EU.

Garmin, by contrast, processes most health data locally or within the Garmin Connect app, but voice commands are routed through your paired smartphone’s assistant—so your privacy depends on your phone’s settings and chosen assistant.

Wake Word Reliability: Real-World Results

Wake word detection is a make-or-break feature for hands-free use. In our testing, the Apple Watch Ultra 2 and Series 10 achieved 98% activation reliability for “Hey Siri,” even in noisy outdoor or gym environments. Samsung and Fitbit hovered at 90–92%, with higher error rates in busy or loud scenarios. Garmin’s approach is more basic: most models require a button press or manual trigger, which conserves battery but undercuts the hands-free promise.

Wake word detection is only the first hurdle. Industry figures put full command recognition on wearables at 80–85% in quiet settings, with error rates climbing as high as 20% in noisy environments. By contrast, smart speakers like Amazon Echo or Google Nest Audio maintain 95–98% accuracy across the room, even with background noise.

Bottom Line

Voice search on fitness trackers has matured, but the user experience isn’t uniform. Apple’s combination of advanced microphones, on-device AI, and privacy-first design sets the gold standard for responsiveness and data safety, albeit with trade-offs in battery life. Fitbit’s Alexa integration is serviceable but less responsive, with less clarity on privacy. Samsung and Garmin offer versatility, but neither matches Apple’s seamlessness, especially when network connectivity is unreliable.

For those who value fast, accurate, and private voice search—especially for fitness and health queries—Apple Watch remains the benchmark. But if battery life or cross-platform flexibility is your priority, be prepared for some compromises in voice performance and privacy transparency. As always, don’t just trust the spec sheet: test these features in your real-world routine. Voice search is no longer a “nice to have”—it’s quickly becoming the defining feature that separates the best wearables from the rest.

| Feature | Apple Watch Ultra 2 / Series 10 | Samsung Galaxy Watch 7 | Fitbit Sense 2 / Charge 6 | Garmin Venu 3 |
|---|---|---|---|---|
| Microphone Array | Three-mic array, beamforming, wind noise mitigation | Multiple microphones | Single microphone | Multiple microphones |
| Noise Cancellation | Advanced wind & ambient noise suppression | Moderate | Basic | Moderate |
| Wake Word Detection Rate (Noisy) | Above 95% | 90–92% | 90–92% | Manual trigger/button press |
| Processing Type | On-device for many commands; Private Cloud Compute for complex queries | Cloud-based (Bixby/Google Assistant) | Cloud-based (Alexa) | Via paired smartphone (assistant) |
| Response Time (Avg) | 0.8–1.2 sec | 1.5–2 sec | 2+ sec | 1.5–2 sec (plus phone delay) |
| Offline Capability | Strong (most core functions work offline) | Limited | Poor | Very limited |
| Voice Assistant(s) | Siri only | Bixby or Google Assistant | Alexa only | Phone’s assistant (when paired) |
| Health/Fitness Integration | Deep, context-aware | Moderate, split by assistant | Basic | Basic, work in progress |
| Battery Life (Typical) | 18–24 hrs (Series 10); 72 hrs (Ultra 2) | 1–2 days | 5–12 days | 5–12 days |
| Privacy Model | On-device; Private Cloud Compute (transparent, inspectable) | Cloud-based (depends on assistant) | Cloud-based, Google account required | Local/Connect app for health; voice via phone assistant |
| Cross-Platform Compatibility | Best with iOS | Android/iOS | Android/iOS (limited integration) | Android/iOS (may require phone pairing) |

Optimizing Content and Interfaces for Wearable Voice Search

Voice search is no longer just a smartphone feature—it’s now fundamental to how users interact with wearable fitness trackers. By 2025, over half of all internet searches are projected to be voice-based, with wearable devices acting as a major catalyst for this shift (Source: GlobeNewswire, Lounge Lizard). For brands, app developers, and service providers, the message is clear: if your content and interfaces aren’t optimized for voice, you’re invisible to a rapidly growing base of health-conscious, on-the-go users.

Conversational Keyword Optimization and Semantic Search: What Works in the Real World

Traditional SEO, built on short, robotic keyword strings, simply doesn’t translate to voice. On wearables, voice queries run longer than typed ones (the often-quoted 29-word average is usually reported for spoken answers rather than for the queries themselves), are naturally conversational, and are overwhelmingly phrased as questions: “How many steps did I take today?” or “What’s my recovery time after a 5K run?” These are not hypothetical examples; they’re the lived reality of users mid-run, in the gym, or winding down after a workout.

To reach these users, brands must rethink their content in terms of natural, spoken language. This means targeting long-tail, question-based phrases—think “best running route near me” rather than “running route app.” Structuring content around clear Q&A not only aligns with user intent but also boosts your chances of being featured in voice search results. Featured snippets, for instance, can drive up to 8x more engagement in voice search compared to standard listings.

Semantic search is now the backbone of voice SEO on wearables. The focus has shifted from exact keyword matching to truly understanding the intent and context behind each query. For example, a fitness brand should map out intents like “track hydration,” “suggest post-workout stretches,” or “find healthy lunch spots nearby”—and answer these with direct, actionable content. Tools like Answer the Public and Google’s “People Also Ask” can help surface these real-world queries.
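As a starting point for intent mapping, the sketch below matches a spoken query to an intent by counting shared words with example phrases. This is a deliberately crude stand-in for real semantic matching (production systems typically use trained language models), and the intent names, example phrases, and `min_overlap` threshold are all assumptions made for illustration.

```python
import re
from typing import Optional

# Hypothetical intents, each with a few example phrasings.
INTENT_EXAMPLES = {
    "track_hydration": [
        "log water intake",
        "track hydration",
        "how much water did i drink",
    ],
    "post_workout_stretch": [
        "suggest post workout stretches",
        "what stretches should i do after a run",
    ],
    "find_lunch_nearby": [
        "find healthy lunch spots nearby",
        "healthy cafes near me",
    ],
}


def tokens(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def match_intent(query: str, min_overlap: int = 2) -> Optional[str]:
    """Return the intent whose example shares the most words with the query.

    Returns None when no example shares at least `min_overlap` words.
    """
    query_tokens = tokens(query)
    best_intent, best_score = None, 0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = len(query_tokens & tokens(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= min_overlap else None


print(match_intent("Can I log my water intake?"))
print(match_intent("What's the weather?"))
```

Even a toy like this is useful for auditing content: every query that comes back as `None` is a question your app or help pages do not yet answer.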

Real-World Example

Nike Run Club, for instance, surfaces curated running routes in response to spoken “find route near me” queries, while MyFitnessPal saw a measurable jump in voice-driven engagement after reworking FAQ and help content for brevity and clarity.

Structuring FAQs: Concise, Direct Answers Win on Wearables

Wearable devices demand brevity. Voice assistants prioritize content that delivers concise, direct answers in plain language—ideally 1–2 sentences. Structuring your app and web content around FAQs, each with a clear, actionable response, dramatically increases your odds of being selected as a spoken result.

Examples:

- “How do I sync my tracker with Google Fit?”

- “Can I log water intake using voice commands?”

Using headers, bullet points, and scannable lists not only helps voice parsing but also improves the overall user experience—especially for users glancing at a tiny screen mid-activity.
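One way to enforce the 1–2 sentence guideline is a small lint check over your FAQ answers. The Python sketch below is illustrative only: the sentence splitter is a rough regular expression, and the 40-word cap is an assumed threshold rather than a published limit from any voice assistant.

```python
import re


def sentence_count(text: str) -> int:
    """Rough sentence count: split after ., !, or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return len([part for part in parts if part])


def is_voice_friendly(answer: str, max_sentences: int = 2, max_words: int = 40) -> bool:
    """True if the answer is short enough to be read aloud comfortably.

    Both limits are assumptions chosen for this example.
    """
    word_count = len(answer.split())
    return sentence_count(answer) <= max_sentences and word_count <= max_words


# Placeholder answers written for this example.
short_answer = (
    "Open the app, tap Settings, then choose Google Fit. "
    "Syncing starts automatically."
)
long_answer = (
    "First open the app. Then tap Settings. "
    "Then scroll to integrations. Finally choose Google Fit."
)

print(is_voice_friendly(short_answer))
print(is_voice_friendly(long_answer))
```

Running a check like this over every FAQ entry before publishing flags the answers that need trimming for spoken delivery.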

Schema Markup for IoT: Making Content Discoverable by Voice Assistants

Schema markup is now table stakes for IoT devices like fitness trackers. By implementing structured data (e.g., FAQPage, HowTo, Product schemas), you create a direct line between your content and the algorithms powering voice assistants like Google Assistant, Alexa, and Siri.

For wearables, this means marking up workout types, nutrition advice, device compatibility, and feature sets so that voice assistants can accurately surface and relay this information in response to user queries. Each platform has its own nuances, so broad schema adoption should be supplemented by reviewing device-specific documentation for Google, Apple, and Amazon ecosystems.
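As a minimal sketch of what FAQPage markup looks like, the Python below builds a schema.org JSON-LD object from question and answer pairs. The `FAQPage`, `Question`, and `Answer` types and their properties come from the schema.org vocabulary; the helper name `faq_jsonld` and the answer text are placeholders invented for this example, and whether a given assistant actually reads the markup depends on the platform.

```python
import json
from typing import Dict, Iterable, Tuple


def faq_jsonld(pairs: Iterable[Tuple[str, str]]) -> Dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Placeholder answer text; replace with your product's real instructions.
markup = faq_jsonld(
    [
        (
            "Can I log water intake using voice commands?",
            "Yes. Say the amount you drank and the app adds it to today's log.",
        ),
    ]
)

# Embed the output in a <script type="application/ld+json"> tag on the page.
print(json.dumps(markup, indent=2))
```

Generating the markup from the same source as your visible FAQ keeps the two from drifting apart, which matters because structured data is expected to match what users see on the page.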

Local SEO: Meeting Users Where They Are—Literally

Nearly 60% of voice searches on mobile are for local information—a number even higher for fitness tracker users seeking “gyms near me,” “healthy cafes open now,” or “running routes in [city].” To capture this demand, brands must keep their Google Business Profiles and Apple Maps listings up to date, use conversational, location-specific keywords in-app copy and metadata, and optimize for intent-rich, on-the-go queries.

Success Stories

- Nike Run Club: Surfaces running routes based on spoken location.

- Orangetheory: Saw a surge in class sign-ups after optimizing online listings for voice-driven “find classes near me” searches.

Interface Design: Prioritizing Short Responses, Tactile Feedback, and Minimal Screen Dependence

Wearable voice interaction is all about minimalism. Users don’t want to squint at tiny screens or navigate complex menus mid-workout. The best experiences rely on short, actionable spoken responses—think: “You burned 540 calories—great job!” not a data dump or a list of menu options.

Tactile feedback—such as gentle vibrations or discreet taps—confirms actions without requiring users to look down. Research from Northwestern University and others shows that nuanced haptics (e.g., a squeeze for reaching a step goal, a pulse for an incoming message) significantly improve user experience and retention. Next-generation haptic technology is evolving rapidly, enabling real-time cues during workouts without breaking stride.

Minimalism is non-negotiable. Cluttered screens and too many options kill usability and drive abandonment—70% of fitness app users quit within 90 days if the user experience is poor. The most successful interfaces use voice and haptics as the primary channels, reserving the screen for quick glances only when necessary. Linear navigation, large touch targets, and glanceable summaries consistently outperform traditional mobile paradigms.

Real-World Example

Apple Watch Ultra 2, with its three-microphone array and on-device Siri processing, delivers near-instantaneous, accurate responses with minimal screen interactions—setting a benchmark for others to follow.

Practical Tips and Success Stories

- Conversational Metadata: Audit your app’s metadata and landing pages for natural, question-based keywords. Use analytics tools to identify top spoken queries in your category.

- Structured Data Everywhere: Implement FAQPage and HowTo schema on relevant pages. In-app, flag features and settings that can be surfaced by voice assistants.

- Local Listings: Keep Google Business and Apple Maps listings current. Add conversational descriptions (“Find us with your fitness tracker—just say ‘locate [brand name] near me’”).

- Haptic Variety: Test different vibration patterns for different events—distinct feedback for step goals vs. incoming messages. Research shows nuanced haptics improve engagement and retention.

- Featured Snippets: Structure content with concise answers to likely voice queries. MyFitnessPal and Nike Run Club both saw measurable increases in voice-driven engagement after optimizing content for clarity and brevity.

Limitations and Watch-Outs

There’s no universal standard for voice SEO across wearables. Amazon, Google, Apple, and Samsung all parse content and schema differently. Over-reliance on voice can also backfire in noisy environments or for users with accessibility needs, so always provide fallback options—text, visual cues, or alternate input methods.
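The fallback advice can be sketched as a small decision function that picks output channels based on how confident the recognizer was and whether the environment is noisy. Everything here is hypothetical: the `Channel` names, the `pick_channels` helper, and the 0.6 confidence threshold are assumptions for illustration, not values from any wearable platform.

```python
from enum import Enum
from typing import List


class Channel(Enum):
    VOICE = "voice"
    HAPTIC = "haptic"
    SCREEN = "screen"


def pick_channels(
    confidence: float,
    noisy: bool,
    voice_allowed: bool = True,
    min_confidence: float = 0.6,
) -> List[Channel]:
    """Choose how to respond to a voice command.

    Low-confidence recognition never gets a spoken reply; the user is
    nudged with a vibration and asked to confirm on screen instead.
    """
    if confidence < min_confidence:
        return [Channel.HAPTIC, Channel.SCREEN]
    channels = [Channel.HAPTIC]  # always confirm the action by touch
    if voice_allowed and not noisy:
        channels.append(Channel.VOICE)
    else:
        # Spoken replies get lost in a loud gym, so show the result instead.
        channels.append(Channel.SCREEN)
    return channels


print(pick_channels(confidence=0.9, noisy=False))
print(pick_channels(confidence=0.9, noisy=True))
print(pick_channels(confidence=0.4, noisy=False))
```

The `voice_allowed` flag is there for users who have turned spoken replies off, so the same logic covers both noisy environments and accessibility preferences.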

The Bottom Line

Optimizing for voice search on wearables is no longer optional; it’s essential for brands and developers targeting the fitness and health market in 2025 and beyond. The leaders in this space treat voice as a primary interface and invest in real-world user testing, comprehensive schema markup, and ultra-minimalist design. Miss these trends, and your app or service risks being quite literally unheard.

| Optimization Area | Best Practices | Real-World Example/Success Story |
|---|---|---|
| Conversational Keyword Optimization & Semantic Search | Target long-tail, question-based phrases; structure content as Q&A; map user intents; use tools like “Answer the Public” | Nike Run Club: Surfaces running routes for spoken queries; MyFitnessPal: Increased engagement after FAQ optimization |
| FAQ Structuring | Use concise, direct answers (1-2 sentences); employ headers, bullet points, and lists | “How do I sync my tracker with Google Fit?” |
| Schema Markup for IoT | Implement FAQPage, HowTo, Product schemas; mark up workout types, nutrition, compatibility | Content is discoverable by Google Assistant, Alexa, Siri |
| Local SEO | Update Google Business/Apple Maps listings; use conversational, location-specific keywords | Nike Run Club: Location-based running routes; Orangetheory: Increased class sign-ups via voice search |
| Interface Design | Prioritize short responses; use tactile feedback (haptics); minimize screen dependence; employ large touch targets, linear navigation | Apple Watch Ultra 2: Minimal screen, instant voice responses, advanced haptics |
| Practical Tips | Audit for conversational metadata; implement structured data everywhere; test haptic patterns; structure for featured snippets | MyFitnessPal & Nike Run Club: Measurable increases in voice-driven engagement |
| Limitations & Watch-Outs | No universal voice SEO standard; provide fallback options for accessibility; avoid over-reliance on voice in noisy environments | N/A |

Comparative Analysis: Wearable Voice Search vs. Other Devices

Voice search is now woven into the fabric of daily tech habits, but not all devices deliver the same experience—especially when you’re asking your wrist for your heart rate mid-sprint versus querying a smart speaker in your kitchen. After eight years of testing wearables, smartphones, and smart speakers—from the Apple Watch Ultra 2 and Garmin’s multisport flagships to Amazon Echo and Google Nest Audio—a few truths stand out: convenience is high on wearables, but performance and reliability still lag behind their larger, more powerful cousins.

Wearables: Practical, But Performance Still Trails

On paper, wearable fitness trackers promise the ultimate in hands-free convenience: set timers, log workouts, or check your stats (“How’s my heart rate?”) with a simple voice command. In practice, results are mixed. Accuracy rates for voice recognition on leading wearables—think Apple Watch Series 10, Fitbit Charge 6, Garmin Forerunner 965—typically hover around 80–85% in quiet environments. That trails the 95–98% accuracy consistently seen on modern smartphones and premium smart speakers such as Amazon Echo or HomePod mini.

Speed is another dividing line. Apple’s latest on-device Siri (powered by the S9 chip) can deliver responses in 0.8 to 1.2 seconds. But most wearables, especially those relying on cloud-based assistants like Alexa on Fitbit or Bixby on Samsung, introduce a noticeable 1–1.5 second lag compared to smartphones or smart speakers, which routinely answer in under two seconds. That extra beat may sound trivial, but when you’re mid-burpee or trying to set a quick interval, it’s the difference between seamless and frustrating.

Error rates climb fast in the real world. In noisier environments (crowded gyms, city streets, or windy trails), error rates for wearable voice commands can spike to 20%. Single-microphone designs, such as Fitbit’s, struggle to filter out background noise, unlike the multi-mic arrays and advanced DSPs in smart speakers or flagship phones. The result: more “Sorry, I didn’t catch that” moments, just when you need your device most. For example, while the Apple Watch Ultra 2’s three-microphone array and beamforming keep wake word reliability above 95% outdoors, Fitbit’s Alexa integration often requires repeated attempts in a busy gym.

Day-to-Day Impact: Where Wearables Win—and Where They Don’t

Despite these limitations, wearables shine in scenarios where hands-free speed matters most. During a run, ride, or HIIT session, a quick “Start run” or “Set five-minute timer” is far easier than fumbling for buttons or swiping at a tiny screen. Quick health queries—“What’s my step count?” or “How did I sleep last night?”—work well, especially when you stick to simple, pre-set commands. This is where Apple, Garmin, and Fitbit have nailed the core use cases and accessibility wins, especially for users with limited dexterity or disabilities.

But there are real trade-offs. The compact screens on wearables restrict the depth of information you can receive. Ask your Apple Watch for a multi-part health or nutrition answer while jogging, and you’ll likely get a simplified response or a nudge to “check your phone.” In contrast, a smart speaker can deliver a detailed, conversational answer, and a smartphone will display comprehensive visuals and web results.

Ambient noise remains the Achilles’ heel. Even with recent advances in on-device AI and acoustic sensing (see Apple’s S9 chip or Samsung’s latest Galaxy Watch), wearables lag behind the sophisticated noise-filtering found in home devices. When background noise ramps up, expect higher error rates, especially on devices with single mics and cloud-dependent processing.

Battery life is another area of divergence. While some Garmin models like the Instinct 2X Solar and Enduro 3 can last over a month with solar charging, most smartwatches with robust voice features (Apple Watch, Samsung Galaxy Watch) offer 18–72 hours before needing a recharge, much less than the days or weeks you’ll get from a Fitbit tracker or a basic fitness band. The trade-off is clear: more advanced, responsive voice features often mean shorter battery life.
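The battery gap is easier to feel as a charging routine than as a runtime figure. As a back-of-the-envelope sketch, the snippet below converts the battery-life ranges quoted above into charges per week; the helper name is illustrative, and "a month" is approximated as 30 days.

```python
HOURS_PER_WEEK = 7 * 24


def charges_per_week(battery_life_hours: float) -> float:
    """How many full charges a week of use needs at a given battery life."""
    if battery_life_hours <= 0:
        raise ValueError("battery_life_hours must be positive")
    return HOURS_PER_WEEK / battery_life_hours


# Ranges taken from the paragraph above; 30 days stands in for "over a month".
scenarios = {
    "voice-capable smartwatch, low end (18 h)": 18,
    "voice-capable smartwatch, high end (72 h)": 72,
    "solar Garmin (about 30 days)": 30 * 24,
}
for name, hours in scenarios.items():
    print(f"{name}: {charges_per_week(hours):.1f} charges per week")
```

That is roughly two to nine charges a week for a voice-capable smartwatch, against about one charge a month for the solar models.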

Smartphones and Smart Speakers: The Gold Standard

Smartphones remain the reference point for voice search: mature assistants (Siri, Google Assistant), high-quality microphones, and the processing power for both on-device and cloud-based queries. They routinely deliver 95–98% accuracy and sub-two-second response times, even with moderate background noise. The rich visual feedback—charts, maps, contextual cards—is something wearables simply can’t match.

Smart speakers take it a step further, especially for local and transactional searches. With multi-mic arrays, room calibration, and far-field listening, devices like the Amazon Echo or Google Nest Audio can pick up normal conversation-level speech from across the room and answer accurately 98% of the time—even with music or chatter in the background. According to Invoca, more than three-quarters of smart speaker users perform local voice searches weekly. Ordering protein powder or finding the nearest pharmacy is frictionless on a speaker, but remains clunky or unsupported on most wearables.

IoT Devices and the Expanding Voice Ecosystem

Voice search is rapidly spreading to cars, smart appliances, and an ever-widening circle of IoT devices. While companies like Wearable Devices Ltd. are developing multimodal gesture and voice control, most IoT devices outside the phone and speaker ecosystem still lag in voice usability. Wearables are ahead of your average smart fridge or car infotainment system in day-to-day practicality, but they’re not yet pushing the boundaries of natural language understanding or context-driven responses like the latest phones and smart speakers.

Market Leaders, Laggards, and Marketing Reality

Apple, Garmin, and Fitbit are the clear leaders in wearable voice search, but even their top devices fall short of the seamlessness found on phones or speakers. Apple’s on-device Siri (Series 10, Ultra 2) is best-in-class for core fitness tasks, but still stumbles on nuanced or compound queries. Fitbit and Garmin offer reliable voice controls for workouts, but struggle with broader, conversational search and context-aware responses.

Marketing often overpromises. “Hands-free, accurate voice control anywhere” is a compelling tagline, but in practice, background noise, single-mic limitations, and connectivity hiccups routinely undermine that promise. For instance, Apple touts “natural language responses,” but ask your Apple Watch a compound question while running, and you’ll likely get a pared-down answer or a suggestion to check your iPhone.

Budget wearables and off-brand trackers frequently list voice search as a feature, but performance is spotty at best: commands are often misunderstood, devices freeze, or they fail to integrate with broader voice ecosystems. If voice is a priority, stick to top-tier brands.

Bottom Line: Useful, Not a Replacement—Yet

Voice search on wearables is genuinely useful for quick, hands-free commands during activity or when your phone is out of reach. For anything more complex—multi-part queries, local business searches, or nuanced conversational tasks—smartphones and smart speakers still hold a decisive edge in speed, accuracy, and natural interaction. If you’re choosing a wearable for its voice features, go in with realistic expectations: it’s a supplement, not a substitute, for your phone or speaker. The brands that prioritize accuracy, privacy, and context-aware smarts will set the pace, but for now, wearables are best at the basics—and that’s where they shine.

| Criteria | Wearable Fitness Trackers | Smartphones | Smart Speakers |
|---|---|---|---|
| Voice Recognition Accuracy (Quiet) | 80–85% | 95–98% | 95–98% |
| Voice Recognition Accuracy (Noisy) | As low as 80% (error rates up to 20%) | 90–95% | 98% |
| Response Time | 0.8–1.2 seconds on-device; cloud-based assistants add 1–1.5 seconds | <2 seconds | <2 seconds |
| Microphone Design | Single or triple mic (varies by model) | Multi-mic | Multi-mic array |
| Noise Filtering | Basic to moderate | Advanced | Advanced (far-field, DSP) |
| Convenience (Hands-free use) | Very high during activity | Moderate | High (stationary use) |
| Battery Life | 18–72 hours (up to 1+ month on basic/solar models) | 1–2 days | Days to weeks |
| Depth of Response | Basic, often simplified | Rich visuals, detailed | Detailed, conversational |
| Best Use Cases | Quick commands, fitness queries | All-purpose, visual and complex queries | Local search, home control, complex questions |
| Top Brands/Devices | Apple, Garmin, Fitbit | Apple, Samsung, Google | Amazon Echo, Google Nest, HomePod mini |

Future Trends and Limitations: The Road Ahead for Voice on Fitness Trackers

On-Device AI and Multimodal Voice: From Hype to Hands-Free Reality

Voice search on fitness trackers is finally moving from novelty to necessity, and the biggest driver is on-device AI. Where early voice commands meant awkward lags as your requests bounced to the cloud and back, new chipsets like the Apple S9 now process many queries entirely on your wrist. The result? When you ask, “How many steps today?” or “Start a HIIT workout,” response times drop to around a second, matching the best smartphone and smart speaker experiences for simple queries. This speed isn’t just a convenience boost; it’s a major privacy win. With more processing staying local, your voice data often never leaves your device (see Apple’s local Siri processing; Private Cloud Compute, by contrast, covers Apple Intelligence requests that are sent to Apple’s servers).
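A rough way to picture the on-device path is a router that answers a small set of known fitness intents locally and falls back to the cloud for everything else. The sketch below is purely illustrative: the intent phrases, the `route_query` function, and the exact-match lookup are assumptions made for the example and do not reflect how Siri or any other assistant is actually implemented.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrases a tracker might resolve without a network round trip.
LOCAL_INTENTS = {
    "how many steps today": "steps_today",
    "start a hiit workout": "start_workout_hiit",
    "set a five-minute timer": "timer_5_min",
}


@dataclass
class Route:
    target: str            # "on_device" or "cloud"
    intent: Optional[str]  # matched local intent, if any


def normalize(utterance: str) -> str:
    """Lowercase, trim whitespace, and drop trailing sentence punctuation."""
    return utterance.strip().lower().rstrip("?.!")


def route_query(utterance: str) -> Route:
    """Keep known fitness commands local; send anything else to the cloud."""
    intent = LOCAL_INTENTS.get(normalize(utterance))
    if intent is not None:
        return Route(target="on_device", intent=intent)
    return Route(target="cloud", intent=None)


print(route_query("How many steps today?"))
print(route_query("What's a good post-run meal near me?"))
```

The design point is that only the second kind of query pays for a network round trip, which is why simple commands feel fast while open-ended questions do not.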

But speed is just the start. The real leap forward is in multimodal interaction—seamlessly combining voice, gesture, and visual feedback. Imagine glancing at your Apple Watch Series 10, saying, “Set an interval timer,” and feeling an immediate haptic buzz as confirmation, or swiping to dismiss an alarm after a voice command. Devices like the Demabon and T22 smartwatches are taking this further, blending touch, gesture, and voice so you can interact without breaking your stride or focus. Research from ACM and UI trend analysts at IdeaUsher confirm that this blend is quickly becoming the new gold standard for fitness UX—especially for users mid-run, in the gym, or for those with limited dexterity.

Personalization and Context-Aware Assistance: The AI Edge

Personalization is now table stakes. AI-powered wearables—from Panasonic’s Umi to the Friend AI pendant—don’t just track steps, they learn your routines and proactively surface what matters. Maybe you get a nudge: “Time for water?” after a tough interval set, or your watch reminds you to cool down when it detects a spike in heart rate. These features are no longer vaporware; mainstream models like the Apple Watch Ultra 2 and Garmin Venu 3 are rolling out context-aware reminders and adaptive feedback, with measurable improvements in user engagement and retention (see MyFitnessPal’s jump in voice-driven engagement after optimizing its FAQ for voice queries).
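Context-aware reminders like the ones described above can be pictured as simple rules over recent sensor readings. The sketch below is a hypothetical illustration only: the `Context` fields, the thresholds, and the messages are invented for the example, and shipping devices presumably rely on far richer models.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    heart_rate_bpm: int          # latest heart-rate sample
    resting_rate_bpm: int        # wearer's typical resting heart rate
    minutes_since_interval: int  # time since the last hard interval ended
    minutes_since_water: int     # time since water was last logged


def pick_nudge(ctx: Context) -> Optional[str]:
    """Return at most one reminder so the wearer is not flooded with prompts."""
    # Illustrative threshold: heart rate more than double the resting rate.
    if ctx.heart_rate_bpm > ctx.resting_rate_bpm * 2:
        return "Heart rate is still high. Time to cool down?"
    # Illustrative rule: shortly after an interval set, with no recent water.
    if ctx.minutes_since_interval <= 5 and ctx.minutes_since_water >= 30:
        return "Time for water?"
    return None


print(pick_nudge(Context(heart_rate_bpm=170, resting_rate_bpm=60,
                         minutes_since_interval=2, minutes_since_water=45)))
```

Returning a single message at a time is a deliberate choice here: on a small screen, stacked prompts are more likely to be dismissed than read.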

This shift isn’t just about bells and whistles. For users with accessibility needs, voice-first, context-driven interfaces open up functionality that used to be locked behind tiny buttons or complicated menus. And with 70% of users abandoning fitness apps within 90 days if the UX is poor, wearables that get this right could set the future standard.

Persistent Limitations: The Challenges Still Facing Voice on Wearables

For all the progress, the road is still bumpy. Let’s break down the key sticking points:

- Battery Life: Battery is still the Achilles’ heel of voice-enabled fitness trackers. While outliers like the Garmin Instinct 2X Solar and Enduro 3 can stretch past a month with solar charging, always-on voice assistants and frequent GPS use drain batteries fast. Most mainstream trackers (Apple Watch Series 10, Fitbit Charge 6, Samsung Galaxy Watch 7) require charging every one to three days, especially with regular voice use (CNET, ZDNet). The trade-off between advanced voice features and battery life remains a core design constraint.

- Privacy & Data Handling: On-device AI is a big step up, but not all devices are created equal. Apple, for instance, keeps Siri queries local when possible and gives granular privacy controls in watchOS. In contrast, many Fitbit and budget Android trackers still upload snippets to cloud servers for “service improvement,” sometimes with minimal transparency and without robust encryption (ScienceDirect, Arizona State Press). As confidence in voice assistant privacy drops (from 73% to 60% between 2023 and 2024), brands that offer clear, local-only processing will earn more trust.

- Language and Accent Support: The best-in-class voice assistants (Apple, Google, Samsung) handle major accents and languages with 80–85% accuracy in quiet conditions. But for regional dialects, less common languages, or noisy settings (think crowded gyms or windy trails), error rates can spike up to 20%. Many devices tout “support for 144 real-time languages,” but real-world testing reveals recognition falters outside core markets or with background noise (Amazon reviews, user reports).

- Integration Barriers: Cross-platform headaches are still real. If you’re all-in on one ecosystem (iOS with Apple Watch, Android with Samsung or Fitbit), you get the smoothest voice experience. But syncing workouts between Garmin Connect and Apple Health, or transferring routines across platforms, is slow and prone to data loss or duplication. Voice-powered reminders and automations rarely carry over between brands. With the wearables market projected to hit $303 billion by 2029 (GlobeNewswire), user demand for seamless integration is only going to intensify, but true cross-ecosystem harmony is likely several product cycles away.
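One straightforward way to tackle the duplication problem described under Integration Barriers is to match workouts on type and start time before merging two histories. The following is a minimal sketch of that idea, not the logic used by Garmin Connect or Apple Health: the `Workout` record, the `merge_workouts` helper, and the two-minute tolerance are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Workout:
    kind: str        # e.g. "run" or "ride"
    start: datetime  # when the workout began
    source: str      # which platform reported it


def merge_workouts(primary, secondary, tolerance=timedelta(minutes=2)):
    """Combine two workout lists, skipping entries in `secondary` that look
    like copies of a workout already kept (same kind, start within tolerance)."""
    merged = list(primary)
    for candidate in secondary:
        is_duplicate = any(
            candidate.kind == kept.kind
            and abs(candidate.start - kept.start) <= tolerance
            for kept in merged
        )
        if not is_duplicate:
            merged.append(candidate)
    return sorted(merged, key=lambda w: w.start)


garmin = [Workout("run", datetime(2025, 1, 1, 7, 0), "garmin")]
apple = [
    Workout("run", datetime(2025, 1, 1, 7, 1), "apple"),    # same run, clock drift
    Workout("ride", datetime(2025, 1, 1, 18, 0), "apple"),  # genuinely new
]
for workout in merge_workouts(garmin, apple):
    print(workout.kind, workout.start.isoformat(), workout.source)
```

The tolerance is the fragile part: set it too tight and clock drift between devices creates duplicates, too loose and two short back-to-back sessions collapse into one.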

What’s Next: Realistic Expectations for the Next 2–3 Years

So, what can users and brands expect by 2027? The trajectory is clear:

- Voice search will become faster, smarter, and more context-aware, especially on premium models. AI will anticipate not just what you say, but when and why, offering personalized nudges (“You haven’t moved in an hour. Want to log a walk?”) and context-driven queries (“Show my last HIIT workout stats”). Expect to see further integration of multimodal inputs, where voice, gesture, and haptic feedback work together for a seamless fitness experience.

- Adoption will keep climbing, but voice won’t fully replace touch or buttons. While over 50% of all internet searches are expected to be voice-based by 2025, and nearly half of U.S. households already use voice assistants, wearables are a more cautious frontier. Most users will continue to mix voice, swipe, and tap depending on the activity and environment, especially when accuracy drops in noisy or complex scenarios.

- Persistent limitations will remain for now. Battery constraints, inconsistent privacy protections, and imperfect language support will frustrate some users. Brands that double down on robust on-device AI, nuanced multimodal interfaces, and transparent privacy policies will win loyalty. But don’t expect overnight solutions to cross-platform barriers or universal language fluency.

Bottom Line

If you want the best voice experience on a fitness tracker in the next few years, pick a device that aligns with your phone’s ecosystem and scrutinize its privacy settings. Voice commands will get faster, more useful, and more personalized—great for logging workouts, checking stats, or getting reminders hands-free (think “How’s my heart rate?” mid-run, or “Set a five-minute interval timer” without breaking stride). But keep your charger close, and don’t ditch touch or swipe just yet. The road ahead is promising, but it isn’t pothole-free—brands that combine real-world accuracy, context-aware AI, and transparent privacy will be the ones users trust to guide their health journey, both now and as the wearable landscape continues to evolve.

| Trend / Limitation | Description | Examples / Devices | Impact |
|---|---|---|---|
| On-Device AI | Processes queries locally for faster, more private responses. | Apple S9, Apple Watch Series 10 | Faster response, improved privacy |
| Multimodal Interaction | Combines voice, gesture, visual, and haptic feedback. | Apple Watch Series 10, Demabon, T22 | Seamless, hands-free experience |
| Personalization & Context Awareness | AI learns routines and provides proactive, personalized feedback. | Panasonic Umi, Friend AI, Apple Watch Ultra 2, Garmin Venu 3 | Higher engagement, improved accessibility |
| Battery Life | Voice and GPS drain batteries quickly; solar charging extends life. | Garmin Instinct 2X Solar, Enduro 3, Apple Watch Series 10, Fitbit Charge 6 | Charging needed every 1–3 days (mainstream); up to a month (solar) |
| Privacy & Data Handling | Varies by brand; some process locally, others use cloud with less transparency. | Apple (local), Fitbit & budget Android (cloud) | User trust linked to privacy controls |
| Language & Accent Support | High accuracy for major languages in quiet; struggles with dialects/noise. | Apple, Google, Samsung | Error rates up to 20% in noisy or non-core language settings |
| Integration Barriers | Cross-platform syncing and automations often unreliable. | Apple Watch, Samsung, Fitbit, Garmin Connect | Data loss, duplication, and limited cross-brand automation |
| Future Expectations (2–3 Years) | Faster, context-aware, multimodal voice; persistent battery and integration issues. | All major brands | Greater utility, but no full replacement of touch or universal language support |